Project: Suppliers

Create New Digger

Please note:

If you work with the visual extractor, the project and digger creation process may differ. This section covers creating a project and a digger through the website only. To learn how to do this through the extractor, please refer to the appropriate section.

Adding a digger to the project

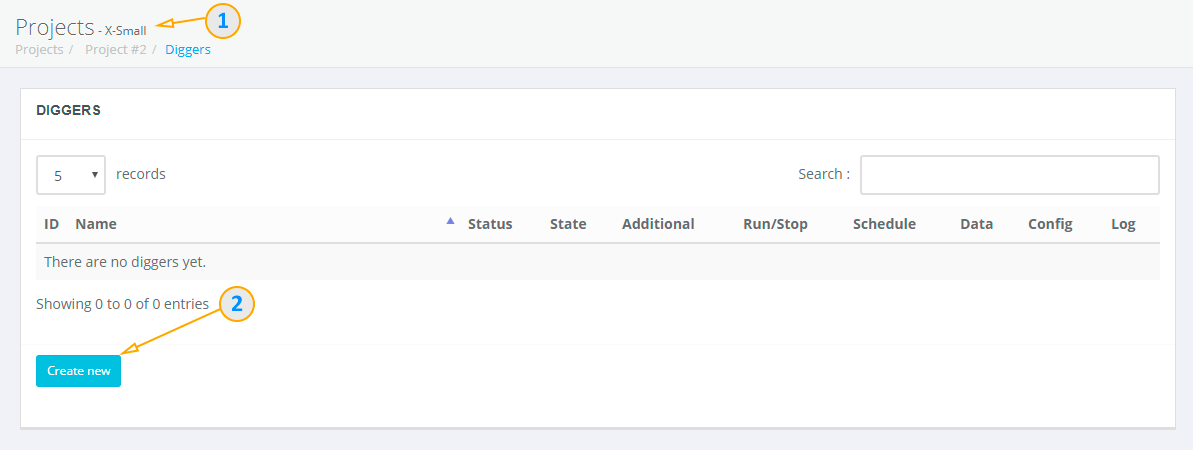

So, we created the Suppliers project and selected it in the navigation menu.

Now we can see the table of diggers that belong to the Suppliers project. Since we just created the project, there are no diggers in it yet.

- Current subscription plan

- Press this button to create a new digger

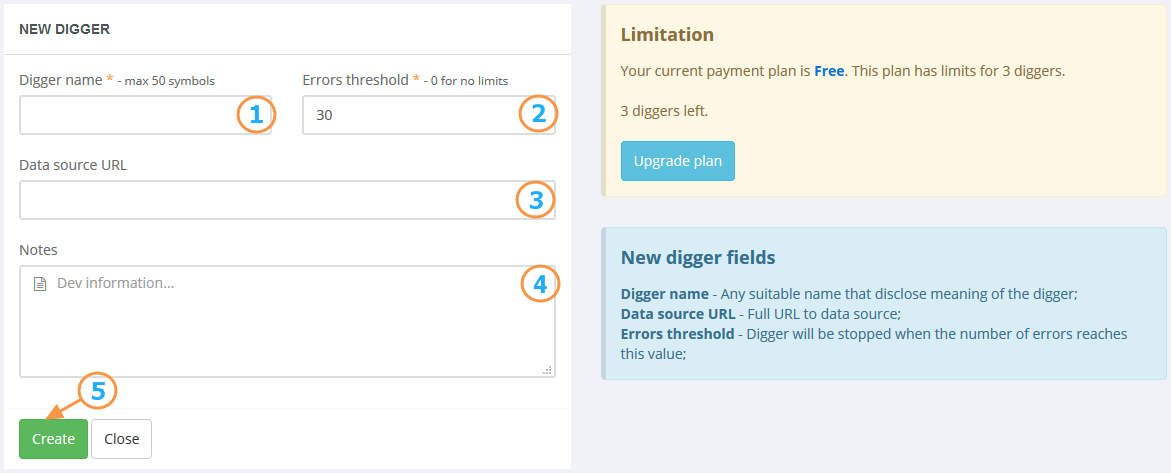

- Digger name

- Error threshold (default 30)

- URL of source website (optional)

- Notes (optional)

- Create digger

Digger name - any name for your digger (up to 50 characters). For example, you can use the domain name of the source site (like domain.com). We will use Supplier #1 as the name of our first digger.

When the digger is running, two types of errors can occur:

- general errors

- data validation errors

The error threshold is the number of general errors allowed during the scraping process. When this limit is reached, the digger is stopped. This is an important parameter: it lets you catch changes on the source site that can break the digger's logic. Usually, you develop a digger configuration using the meta-language or load it from the visual extractor, and it works as required. But over time, the source site may get a software update or change its markup or layout, and your digger will no longer work properly because the paths to elements will be different. The digger then becomes useless and just spends your valuable resources without giving you any results. This is where the error threshold comes in handy, as it forces the digger to stop if it is not working correctly.

You can set this field to 1, and the digger will stop at the first error and will not waste resources until you figure out what the error is and correct your configuration. Or you can turn off error control by setting this field to 0.
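The stopping rule described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the service's actual implementation: the function `run_digger`, its parameters, and the `scrape` callback are hypothetical names introduced for this example.

```python
def run_digger(pages, scrape, threshold=30):
    """Process pages until they run out or the error threshold is reached.

    Hypothetical sketch: `threshold` mirrors the "Error threshold" field
    (default 30); a value of 0 turns error control off.
    Returns (results, error_count, stopped_by_threshold).
    """
    errors = 0
    results = []
    for page in pages:
        try:
            results.append(scrape(page))
        except Exception:
            # Count every general error raised while scraping a page.
            errors += 1
            # Stop as soon as the limit is reached, unless control is off.
            if threshold > 0 and errors >= threshold:
                return results, errors, True
    return results, errors, False


def flaky_scrape(page):
    """Stand-in for a scraper that fails on odd page numbers."""
    if page % 2:
        raise ValueError("element not found")
    return page * 10
```

With `threshold=1` the run ends at the first failing page, while `threshold=0` lets the run finish and only reports how many errors occurred.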

Sometimes it is not enough to control only the general errors when working with a source; you also need to control the validity of the data. For this case, we provide a very powerful and flexible data checking tool in the form of JSON Schema Validation. For more information about this mechanism, see the data validation section.
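To give a feel for what a data validation error is, here is a deliberately tiny Python sketch that checks a scraped record against a schema. It handles only the `required` and `type` keywords and is not a full JSON Schema validator. The schema, the field names `name` and `price`, and the `validate` function are assumptions made up for this example, not part of the service.

```python
# Hypothetical schema for one scraped supplier item.
SCHEMA = {
    "type": "object",
    "required": ["name", "price"],
    "properties": {
        "name": {"type": "string"},
        "price": {"type": "number"},
    },
}

# Mapping from a few JSON Schema type names to Python types.
TYPE_MAP = {"string": str, "number": (int, float), "integer": int, "boolean": bool}


def validate(record, schema):
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for field in schema.get("required", []):
        if field not in record:
            problems.append(f"missing required field: {field}")
    for field, rules in schema.get("properties", {}).items():
        if field not in record:
            continue
        type_name = rules.get("type")
        expected = TYPE_MAP.get(type_name)
        value = record[field]
        # bool is a subclass of int in Python, so reject it for non-boolean types.
        is_stray_bool = isinstance(value, bool) and type_name != "boolean"
        if expected is not None and (not isinstance(value, expected) or is_stray_bool):
            problems.append(f"{field}: expected {type_name}")
    return problems
```

A record such as `{"name": "Bolt", "price": 1.5}` passes, while a record with a missing name or a price scraped as text is reported as invalid even though no general error occurred.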

URL of source website - this field is optional; it only indicates which website or URL the digger works with. This is convenient when you have several diggers that work with the same site, because you can tell exactly which part of the site each digger scrapes.

The Notes field is intended primarily for describing requirements to the developer if you order the development of the script from third-party developers. But in general, this field can be used for any relevant information about the digger or the scraped website.

Type "Supplier #1" into the Digger name field, click the Create digger button, and let's see what we get.
